Operating System Short Notes

Chapter 1: Basic Concepts of Operating Systems

Operating System

An operating system is a program that manages a computer’s hardware. It also provides a basis for application programs and acts as an intermediary between the computer user and the computer hardware.

A computer system can be divided roughly into four components:

- Hardware: CPU, memory, input/output (I/O) devices, etc.

- Operating System: Controls the hardware and coordinates its use among the various application programs for the various users.

- Application Programs: Word Processors, Spreadsheets, Compilers and Web Browsers

- Users

Two viewpoints of an operating system:

- User View: The user's perspective, concerned with things like ease of use and resource utilization.

- System View: The computer's point of view, in which the operating system acts as a resource allocator and a control program.

Firmware

The bootstrap program is typically stored within the computer hardware in read-only memory (ROM) or electrically erasable programmable read-only memory (EEPROM). Code stored this way is known by the general term firmware.

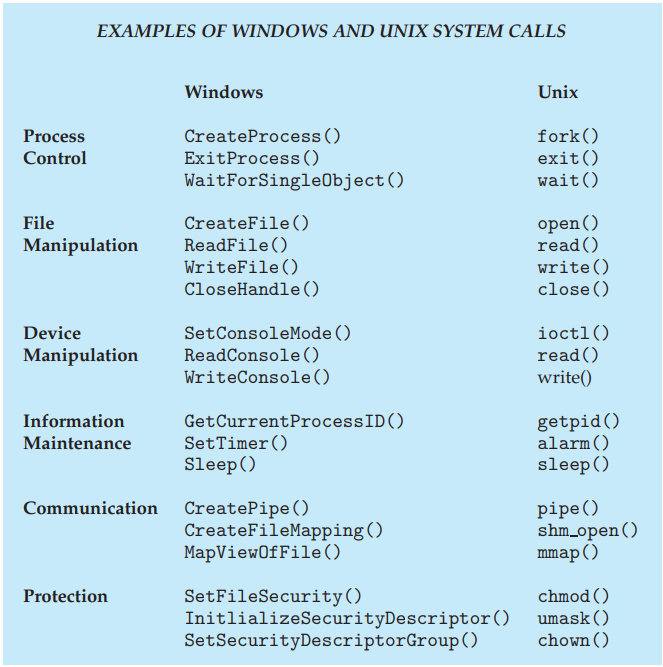

System Call or Monitor Call

Software may trigger an interrupt by executing a special operation called a system call (also called a monitor call).

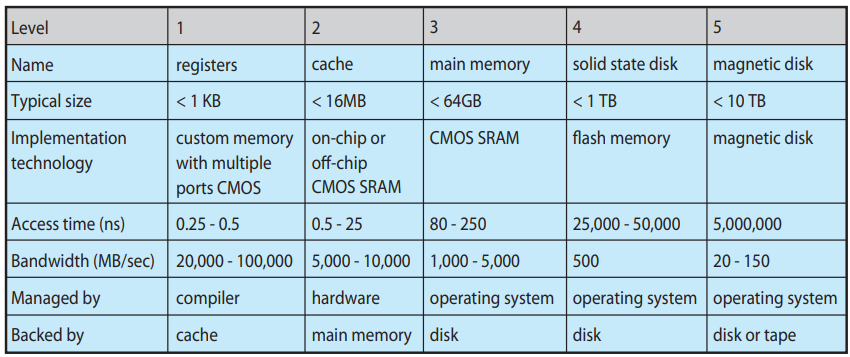
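As a concrete illustration, on POSIX systems Python's `os.write()` and `os.getpid()` are thin wrappers that end up executing the `write()` and `getpid()` system calls: control passes into the kernel, the kernel performs the service, and control returns to the user program. This is a minimal sketch assuming a POSIX-like system; `write_via_syscall` is a hypothetical helper, not part of any library.

```python
import os

def write_via_syscall(fd: int, text: str) -> int:
    """Hypothetical helper: write text to a file descriptor via os.write.

    os.write wraps the write() system call; the kernel performs the I/O
    and returns the number of bytes it actually wrote.
    """
    return os.write(fd, text.encode("utf-8"))

if __name__ == "__main__":
    pid = os.getpid()  # wraps getpid(): ask the kernel for this process's ID
    count = write_via_syscall(1, f"hello from process {pid}\n")  # fd 1 is stdout
    print(f"kernel reported {count} bytes written")
```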

Storage-device hierarchy (fastest and most expensive first, slowest and cheapest last)

- Registers

- Cache

- Main memory

- Solid-state disk

- Magnetic disk

- Optical disk

- Magnetic tapes

Single-Processor Systems

On a single processor system, there is one main CPU capable of executing a general-purpose instruction set, including instructions from user processes.

Multiprocessor Systems

Multiprocessor systems (also known as parallel systems or multicore systems) have begun to dominate the landscape of computing. Such systems have two or more processors in close communication, sharing the computer bus and sometimes the clock, memory, and peripheral devices. Multiprocessor systems first appeared prominently in servers and have since migrated to desktop and laptop systems. Recently, multiple processors have appeared on mobile devices such as smartphones and tablet computers.

Multiprocessor systems have three main advantages:

- Increased throughput

- Economy of scale

- Increased reliability

Clustered Systems

A clustered system is another kind of system that gathers together multiple CPUs. Clustered systems differ from multiprocessor systems in that they are composed of two or more individual systems (or nodes) joined together. Such systems are considered loosely coupled. Each node may be a single-processor system or a multicore system.

- Clustering is usually used to provide high-availability service.

- Clustering can be structured asymmetrically or symmetrically. In asymmetric clustering, one machine is in hot-standby mode while the other is running the applications. The hot-standby host machine does nothing but monitor the active server. If that server fails, the hot-standby host becomes the active server. In symmetric clustering, two or more hosts are running applications and are monitoring each other.
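The failover behavior of asymmetric clustering can be sketched with a heartbeat timeout. The heartbeat mechanism, the `timeout` value, and the class name are assumptions for illustration only: the active server periodically reports that it is alive, and the hot-standby host promotes itself if no report arrives in time.

```python
from typing import Optional

class AsymmetricCluster:
    """Hypothetical hot-standby pair: the standby watches the active server."""

    def __init__(self, active: str, standby: str, timeout: float) -> None:
        self.active = active
        self.standby: Optional[str] = standby
        self.timeout = timeout        # assumed: seconds of silence tolerated
        self.last_heartbeat = 0.0

    def heartbeat(self, now: float) -> None:
        """Called by the active server to report that it is still alive."""
        self.last_heartbeat = now

    def monitor(self, now: float) -> str:
        """Called by the standby; takes over if the active server went silent."""
        if self.standby is not None and now - self.last_heartbeat > self.timeout:
            self.active, self.standby = self.standby, None  # failover
            self.last_heartbeat = now
        return self.active
```

In symmetric clustering, every host would run applications and call a monitor like this on its peers.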

Trap or Exception

A trap (or an exception) is a software-generated interrupt caused either by an error (for example, division by zero or invalid memory access) or by a specific request from a user program that an operating-system service be performed.

Kernel mode

- Also called supervisor mode, system mode, or privileged mode.

- Mode bit is 0 (zero).

User mode

- Also called non-privileged mode.

- Mode bit is 1 (one).
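The role of the mode bit can be shown with a toy simulation. All names here (`ToyCPU`, `PrivilegedInstructionTrap`) are hypothetical, and real hardware performs this check itself: a privileged instruction succeeds only when the mode bit is 0, while a system call temporarily switches to kernel mode, performs the service, and returns to user mode.

```python
KERNEL_MODE = 0  # mode bit 0: kernel (privileged) mode
USER_MODE = 1    # mode bit 1: user mode

class PrivilegedInstructionTrap(Exception):
    """Raised when user-mode code attempts a privileged instruction."""

class ToyCPU:
    def __init__(self) -> None:
        self.mode_bit = KERNEL_MODE   # the hardware starts in kernel mode at boot
        self.log: list[str] = []      # privileged instructions actually executed

    def execute_privileged(self, name: str) -> None:
        if self.mode_bit != KERNEL_MODE:
            # The hardware refuses and traps to the operating system instead.
            raise PrivilegedInstructionTrap(name)
        self.log.append(name)

    def system_call(self, name: str) -> None:
        # Switch to kernel mode, run the service, then restore the old mode.
        saved = self.mode_bit
        self.mode_bit = KERNEL_MODE
        try:
            self.execute_privileged(name)
        finally:
            self.mode_bit = saved
```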

The operating system is responsible for the following activities in connection with process management:

- Scheduling processes and threads on the CPUs

- Creating and deleting both user and system processes

- Suspending and resuming processes

- Providing mechanisms for process synchronization

- Providing mechanisms for process communication

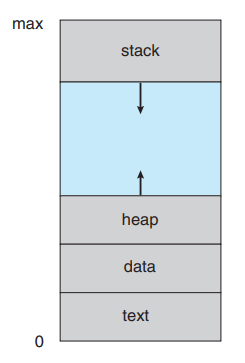
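Process creation and deletion can be observed from user space. This minimal sketch assumes Python 3 with the standard `subprocess` module; `run_child` is a hypothetical helper. The parent asks the operating system to create a child process, then waits for the child to terminate and collects its exit status.

```python
import subprocess
import sys

def run_child(code: str) -> tuple[int, str]:
    """Create a child Python process running `code`, wait for it to
    terminate, and return its exit status and standard output."""
    child = subprocess.Popen(
        [sys.executable, "-c", code],
        stdout=subprocess.PIPE,
        text=True,
    )
    out, _ = child.communicate()  # the parent blocks until the child exits
    return child.returncode, out
```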

The operating system is responsible for the following activities in connection with memory management:

- Keeping track of which parts of memory are currently being used and who is using them

- Deciding which processes (or parts of processes) and data to move into and out of memory

- Allocating and deallocating memory space as needed
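One way to picture these bookkeeping duties is a toy allocator that records which address ranges are in use and by which process. The placement rule used here, first fit, is just one common strategy chosen for illustration; the class and method names are hypothetical.

```python
from typing import Optional

class FirstFitMemory:
    """Toy memory manager: tracks which ranges are used and by whom."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.allocations: dict[int, tuple[int, str]] = {}  # start -> (length, owner)

    def allocate(self, owner: str, length: int) -> Optional[int]:
        """Place the block in the first hole large enough; None if none fits."""
        addr = 0
        for start in sorted(self.allocations):
            if start - addr >= length:
                break                                # hole before `start` fits
            addr = start + self.allocations[start][0]  # skip past this block
        if addr + length > self.size:
            return None
        self.allocations[addr] = (length, owner)
        return addr

    def free(self, start: int) -> None:
        """Deallocate the block that begins at `start`."""
        del self.allocations[start]

    def owner_of(self, address: int) -> Optional[str]:
        """Report which process is using `address`, if any."""
        for start, (length, owner) in self.allocations.items():
            if start <= address < start + length:
                return owner
        return None
```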

The operating system is responsible for the following activities in connection with file management:

- Creating and deleting files

- Creating and deleting directories to organize files

- Supporting primitives for manipulating files and directories

- Mapping files onto secondary storage

- Backing up files on stable (non-volatile) storage media
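These file-management primitives map onto ordinary calls in Python's standard library (`os.mkdir`, `open`, `os.listdir`, `os.remove`, `os.rmdir`). This minimal sketch works in a throwaway temporary directory; the copy made with `shutil.copy` merely stands in for a backup and is not a real stable-storage backup scheme. The function name is hypothetical.

```python
import os
import shutil
import tempfile

def demo_file_operations() -> list[str]:
    """Create a directory and file, back the file up, list, then delete all."""
    base = tempfile.mkdtemp()
    try:
        docs = os.path.join(base, "docs")
        os.mkdir(docs)                              # create a directory
        path = os.path.join(docs, "notes.txt")
        with open(path, "w") as f:                  # create and write a file
            f.write("chapter 1\n")
        shutil.copy(path, path + ".bak")            # stand-in for a backup copy
        listing = sorted(os.listdir(docs))
        os.remove(path)                             # delete the files
        os.remove(path + ".bak")
        os.rmdir(docs)                              # delete the (now empty) directory
        return listing
    finally:
        shutil.rmtree(base, ignore_errors=True)
```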

Cache Coherency

Consider a data item A that has been copied into a cache. The situation becomes more complicated in a multiprocessor environment where, in addition to maintaining internal registers, each of the CPUs also contains a local cache. In such an environment, a copy of A may exist simultaneously in several caches. Since the various CPUs can all execute in parallel, we must make sure that an update to the value of A in one cache is immediately reflected in all other caches where A resides. This requirement is called cache coherency, and it is usually handled in hardware rather than by the operating system.
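One common hardware approach, write-invalidate (named here for illustration; these notes do not specify a protocol), can be sketched in software: when a CPU writes A, every other cache's copy of A is discarded, so the next read on another CPU misses and fetches the fresh value. `CoherentCaches` is hypothetical, and real protocols such as MESI track more cache-line states than this.

```python
class CoherentCaches:
    """Toy write-invalidate scheme over per-CPU caches backed by one memory."""

    def __init__(self, num_cpus: int, memory: dict[str, int]) -> None:
        self.memory = memory
        self.caches: list[dict[str, int]] = [{} for _ in range(num_cpus)]

    def read(self, cpu: int, name: str) -> int:
        cache = self.caches[cpu]
        if name not in cache:             # miss: fetch from main memory
            cache[name] = self.memory[name]
        return cache[name]

    def write(self, cpu: int, name: str, value: int) -> None:
        # Invalidate every other copy so no CPU can read a stale value.
        for i, cache in enumerate(self.caches):
            if i != cpu:
                cache.pop(name, None)
        self.caches[cpu][name] = value
        self.memory[name] = value         # write-through keeps memory current
```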

Virtualization

Virtualization is a technology that allows operating systems to run as applications within other operating systems.

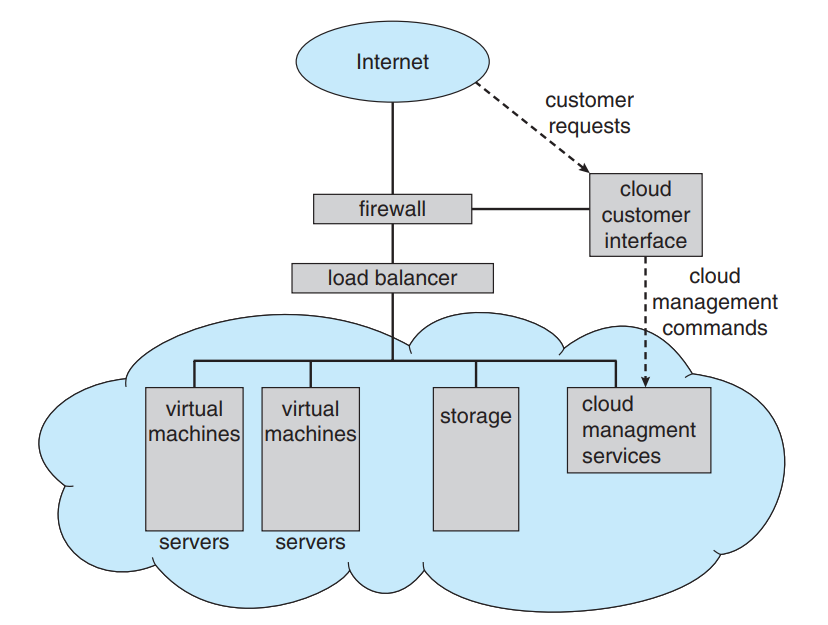

Cloud Computing

Cloud computing is a type of computing that delivers computing, storage, and even applications as a service across a network. In some ways, it’s a logical extension of virtualization, because it uses virtualization as a base for its functionality. For example, the Amazon Elastic Compute Cloud (EC2) facility has thousands of servers, millions of virtual machines, and petabytes of storage available for use by anyone on the Internet. Users pay per month based on how much of those resources they use.

There are many types of cloud computing, including the following:

- Public cloud: a cloud available via the Internet to anyone willing to pay for the services


- Private cloud: a cloud run by a company for that company’s own use

- Hybrid cloud: a cloud that includes both public and private cloud components

- Software as a service (SaaS): one or more applications (such as word processors or spreadsheets) available via the Internet

- Platform as a service (PaaS): a software stack ready for application use via the Internet (for example, a database server)

- Infrastructure as a service (IaaS): servers or storage available over the Internet (for example, storage available for making backup copies of production data)

Finally, you have successfully completed the first chapter. Good job! Keep it up.

Chapter 2: Operating-System Structures

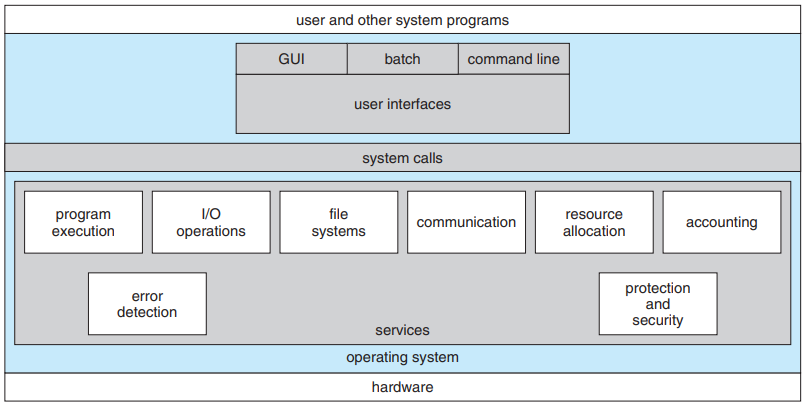

Operating-System Services

One set of operating system services provides functions that are helpful to the user.

- User interface

- Program execution

- I/O operations

- File-system manipulation

- Communications

- Error detection

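Another set of operating-system services exists not to help the user but to ensure the efficient operation of the system itself:

- Resource allocation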

- Accounting

- Protection and security

DTrace

DTrace is a facility that dynamically adds probes to a running system, both in user processes and in the kernel. These probes can be queried via the D programming language to determine a great deal about the kernel, the system state, and process activities.

System Boot

The procedure of starting a computer by loading the kernel is known as booting the system. On most computer systems, a small piece of code known as the bootstrap program or bootstrap loader locates the kernel, loads it into main memory, and starts its execution. Some computer systems, such as PCs, use a two-step process in which a simple bootstrap loader fetches a more complex boot program from disk, which in turn loads the kernel.

When a CPU receives a reset event (for instance, when it is powered up or rebooted), the instruction register is loaded with a predefined memory location, and execution starts there. At that location is the initial bootstrap program. This program is stored in read-only memory (ROM), because the RAM is in an unknown state at system startup. ROM is convenient because it needs no initialization and cannot easily be infected by a computer virus.

Finally, you have successfully completed the second chapter. Good job! Keep it up.

Chapter 3: Processes

Concept of Process

- A process is a program in execution.

- A process is the unit of work in a modern time-sharing system.

- The terms job and process are used almost interchangeably.

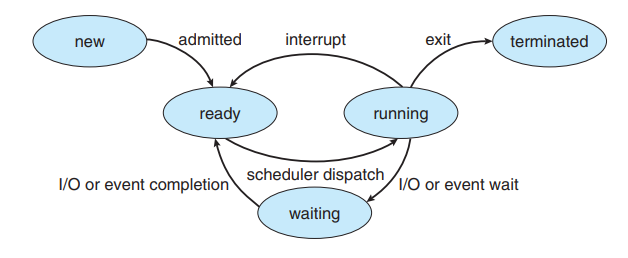

Process State

As a process executes, it changes state. The state of a process is defined in part by the current activity of that process.

A process may be in one of the following states:

- New: The process is being created.

- Running: Instructions are being executed.

- Waiting: The process is waiting for some event to occur (such as an I/O completion or reception of a signal).

- Ready: The process is waiting to be assigned to a processor.

- Terminated: The process has finished execution.

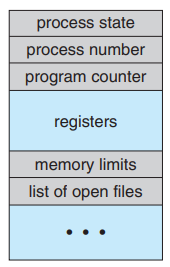
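The five states and the legal moves between them form a small state machine. In this sketch the transition labels follow the usual five-state diagram and are an assumption, as are the names `State`, `TRANSITIONS`, and `move`; any move not listed (for example, waiting directly to running) is rejected.

```python
from enum import Enum

class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"

# Legal transitions and the event that causes each one.
TRANSITIONS = {
    (State.NEW, State.READY): "admitted",
    (State.READY, State.RUNNING): "scheduler dispatch",
    (State.RUNNING, State.READY): "interrupt",
    (State.RUNNING, State.WAITING): "I/O or event wait",
    (State.WAITING, State.READY): "I/O or event completion",
    (State.RUNNING, State.TERMINATED): "exit",
}

def move(current: State, target: State) -> str:
    """Return the event name for a legal transition, else raise ValueError."""
    try:
        return TRANSITIONS[(current, target)]
    except KeyError:
        raise ValueError(
            f"illegal transition {current.value} -> {target.value}"
        ) from None
```

A waiting process must pass through the ready state before it can run again, because only the scheduler can give it the CPU.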

Process Control Block

Each process is represented in the operating system by a process control block (PCB)—also called a task control block. It contains many pieces of information associated with a specific process, including these:

- Process state: The state may be new, ready, running, waiting, halted, and so on.

- Program counter: The counter indicates the address of the next instruction to be executed for this process.

- CPU registers: The registers vary in number and type, depending on the computer architecture. They include accumulators, index registers, stack pointers, and general-purpose registers, plus any condition-code information. Along with the program counter, this state information must be saved when an interrupt occurs, to allow the process to be continued correctly afterward (Figure 3.4).

- CPU-scheduling information: This information includes a process priority, pointers to scheduling queues, and any other scheduling parameters. (Chapter 6 describes process scheduling.)

- Memory-management information: This information may include such items as the value of the base and limit registers and the page tables, or the segment tables, depending on the memory system used by the operating system (Chapter 8).

- Accounting information: This information includes the amount of CPU and real time used, time limits, account numbers, job or process numbers, and so on.

- I/O status information: This information includes the list of I/O devices allocated to the process, a list of open files, and so on.

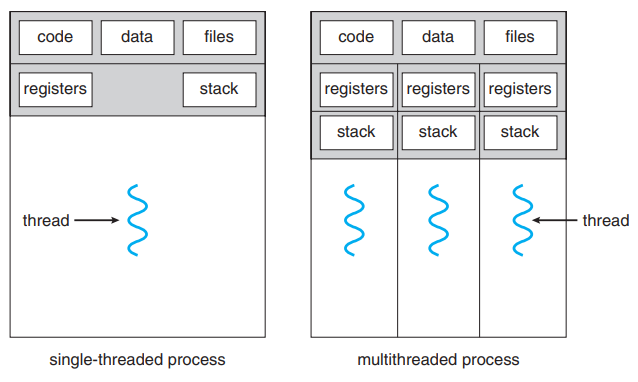
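A PCB can be pictured as a record holding the fields listed above. This sketch uses a Python dataclass with a subset of those fields (the field names are hypothetical) and a toy `context_switch` that saves the running process's program counter and registers into its PCB before loading the next process's saved state onto the simulated CPU.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    pid: int
    state: str = "new"
    program_counter: int = 0
    registers: dict[str, int] = field(default_factory=dict)
    priority: int = 0                 # CPU-scheduling information
    base: int = 0                     # memory-management information
    limit: int = 0
    cpu_time_used: float = 0.0        # accounting information
    open_files: list[str] = field(default_factory=list)  # I/O status

def context_switch(cpu: dict, old: PCB, new: PCB) -> None:
    """Save the running process's CPU state into its PCB, then load the next."""
    old.program_counter = cpu["pc"]
    old.registers = dict(cpu["regs"])
    old.state = "ready"
    cpu["pc"] = new.program_counter
    cpu["regs"] = dict(new.registers)
    new.state = "running"
```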

Threads

When a process is running a word-processor program, a single thread of instructions is being executed. This single thread of control allows the process to perform only one task at a time; for example, the user cannot simultaneously type in characters and run the spell checker within the same process.

- A thread is a basic unit of CPU utilization.

- It comprises a thread ID, a program counter, a register set, and a stack.

- It shares with other threads belonging to the same process its code section, data section, and other operating-system resources, such as open files and signals.

- A traditional (or heavyweight) process has a single thread of control. If a process has multiple threads of control, it can perform more than one task at a time.

- Most operating-system kernels are now multithreaded. Several threads operate in the kernel, and each thread performs a specific task, such as managing devices, managing memory, or interrupt handling.
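The sharing described above can be seen with Python's `threading` module: each thread has its own identifier and its own local variables, but all threads see the same module-level data. This is a minimal sketch; `worker` and `run_threads` are hypothetical names, and the lock guards the shared list because several threads update it.

```python
import threading

shared_results: list[tuple[int, int]] = []  # shared data: visible to every thread
results_lock = threading.Lock()

def worker(n: int) -> None:
    local_square = n * n  # local variable: private to this thread's call frame
    with results_lock:
        shared_results.append((threading.get_ident(), local_square))

def run_threads(count: int) -> list[int]:
    """Start `count` threads in one process and collect what they shared."""
    shared_results.clear()
    threads = [threading.Thread(target=worker, args=(i,)) for i in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish
    return sorted(square for _, square in shared_results)
```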

The benefits of multithreaded programming can be broken down into four major categories:

- Responsiveness: Multithreading an interactive application may allow a program to continue running even if part of it is blocked or is performing a lengthy operation, thereby increasing responsiveness to the user.

- Resource sharing: Threads share the memory and the resources of the process to which they belong by default.

- Economy: Allocating memory and resources for process creation is costly. Because threads share the resources of the process to which they belong, it is more economical to create and context-switch threads.

- Scalability: The benefits of multithreading can be even greater in a multiprocessor architecture, where threads may be running in parallel on different processing cores. A single-threaded process can run on only one processor, regardless of how many are available.

Process Scheduling

- Process scheduler: the process scheduler selects an available process (possibly from a set of several available processes) for program execution on the CPU.

- Job Queue: As processes enter the system, they are put into a job queue, which consists of all processes in the system.

- Ready Queue: The processes that are residing in main memory and are ready and waiting to execute are kept on a list called the ready queue.

- Device Queue: The list of processes waiting for a particular I/O device is called a device queue. Each device has its own device queue.
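The movement of a process between these queues can be sketched with FIFO queues. `ToyScheduler` and its methods are hypothetical, and first-come, first-served selection is an assumption made for simplicity; real schedulers use other policies.

```python
from collections import deque
from typing import Optional

class ToyScheduler:
    """FIFO sketch of the job, ready, and device queues."""

    def __init__(self) -> None:
        self.job_queue: list[str] = []   # all processes in the system
        self.ready_queue = deque()       # in memory, waiting for the CPU
        self.device_queues = {}          # device name -> deque of waiting processes
        self.running: Optional[str] = None

    def admit(self, pid: str) -> None:
        self.job_queue.append(pid)
        self.ready_queue.append(pid)

    def dispatch(self) -> Optional[str]:
        """The process scheduler picks the next ready process (FIFO here)."""
        self.running = self.ready_queue.popleft() if self.ready_queue else None
        return self.running

    def request_io(self, device: str) -> None:
        """The running process blocks and joins that device's queue."""
        if self.running is None:
            raise RuntimeError("no running process")
        self.device_queues.setdefault(device, deque()).append(self.running)
        self.running = None

    def io_complete(self, device: str) -> None:
        """The first waiting process returns to the ready queue."""
        self.ready_queue.append(self.device_queues[device].popleft())
```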

Types of Parallelism

- Data parallelism: focuses on distributing subsets of the same data across multiple computing cores and performing the same operation on each core.

- Task parallelism: distributes not data but tasks (threads) across multiple computing cores. Each thread performs a unique operation. Different threads may be operating on the same data, or they may be operating on different data.
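The difference between the two kinds of parallelism can be sketched with `concurrent.futures`. The function names are hypothetical. Threads keep the example portable, but in standard CPython builds the global interpreter lock prevents CPU-bound threads from executing Python code truly in parallel; `ProcessPoolExecutor` offers the same `map`/`submit` interface for real multicore execution.

```python
from concurrent.futures import ThreadPoolExecutor

def data_parallel_sum(values: list[int], cores: int = 2) -> int:
    """Data parallelism: the same operation (sum) on different slices."""
    chunk = max(1, (len(values) + cores - 1) // cores)
    slices = [values[i:i + chunk] for i in range(0, len(values), chunk)]
    with ThreadPoolExecutor(max_workers=cores) as pool:
        return sum(pool.map(sum, slices))  # combine the partial sums

def task_parallel_stats(values: list[int]) -> tuple[int, int, int]:
    """Task parallelism: different operations (sum, min, max) on the same data."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = [pool.submit(op, values) for op in (sum, min, max)]
        return tuple(f.result() for f in futures)
```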

Thread Pools

The general idea behind a thread pool is to create a number of threads at process startup and place them into a pool, where they sit and wait for work. When a server receives a request, it awakens a thread from this pool—if one is available—and passes it the request for service. Once the thread completes its service, it returns to the pool and awaits more work. If the pool contains no available thread, the server waits until one becomes free.

Thread pools offer these benefits:

- Servicing a request with an existing thread is faster than waiting to create a thread.

- A thread pool limits the number of threads that exist at any one point. This is particularly important on systems that cannot support a large number of concurrent threads.

- Separating the task to be performed from the mechanics of creating the task allows us to use different strategies for running the task. For example, the task could be scheduled to execute after a time delay or to execute periodically.
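Python's `concurrent.futures.ThreadPoolExecutor` is one ready-made thread pool. Unlike the idealized pool described above, it starts its worker threads lazily (up to `max_workers`) rather than all at startup, but it still caps the thread count and reuses idle threads. In this sketch `serve_requests` is a hypothetical name, and uppercasing a string stands in for real request processing.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

def serve_requests(requests: list[str], pool_size: int = 2) -> tuple[list[str], int]:
    """Service each request on a pooled thread; return the responses and
    how many distinct worker threads were actually used."""
    workers_seen = set()
    lock = threading.Lock()

    def handle(request: str) -> str:
        with lock:
            workers_seen.add(threading.current_thread().name)
        return request.upper()  # stand-in for real request processing

    # At most pool_size threads ever exist; extra requests wait in a queue.
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        responses = list(pool.map(handle, requests))
    return responses, len(workers_seen)
```

However many requests arrive, the number of distinct threads never exceeds `pool_size`.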

All the best!